A revolutionary governance reform of the past 30 years has been the adoption of new quality standards and disciplines for government regulations. In particular, over 60 countries have adopted various forms of regulatory impact analysis (RIA) as a mandatory step in developing new legal norms. The rapid spread of RIA is comparable to the rapid spread of modern budgeting methods a century earlier, which provided a new framework for assessing and managing the costs and benefits of government spending.[1] Given the high off-budget costs that governments impose through regulation, the adoption of RIA can be seen as the “second step” in moving toward a more complete accounting of government costs, and better management of government services. It is the natural progression of the Regulatory State.

The full integration of RIA into policymaking is only a question of time, since RIA is currently the only feasible tool to help governments manage critical problems that threaten economic progress (such as the efficiency and effectiveness of the regulatory function of government, as well as the rising off-budget costs of government action).

Despite RIA’s popularity, its teething problems are immense. RIA is a vital government tool, proven in some countries and evolving quickly as governments continually refine its design and application. In many other countries, though, RIA is still working through important implementation problems. One of the puzzles that RIA advocates face is the high rate of failure of RIA implementation. Every review of RIA practice across multiple countries that is based on evaluation of RIA content against clear quality standards finds that a majority of countries that have formally adopted RIA, even those with excellent RIA methods, have been unable to consistently produce RIA of sufficient quality to support better public policy decisions.

In country after country, RIA does not quantify enough impacts, does not define problems using market principles, and does not rigorously examine or compare a range of possible solutions. Quantification of benefits is a problem affecting the majority of RIAs in many countries. The national ritual of adopting a RIA mandate without actually producing anything that can properly be called RIA is depressingly familiar.

This chapter asks “why?” and focuses on a neglected but critical topic for those proposing the RIA approach: the classical RIA method itself. Intrinsic barriers to implementation are created by classical RIA methods as they are transferred to “new” RIA countries. This chapter argues that the classical RIA method is still poorly defined, unduly academic for many countries, and has not yet evolved into the practical approach needed for day-to-day implementation in real-life policy scenarios characterized by low skills, inadequate time, and poor access to data. In other words, we have not yet developed a RIA method that, for most countries, can be practically mainstreamed for the production of relevant and timely policy analysis.

It is argued here that the classical RIA method has not yet been well designed to provide the information that governments need to avoid making costly regulatory mistakes. Problems with the classical RIA method include:

- Open-ended questions: Classical RIA methods use open-ended questions (such as “What are the costs of regulation? How can they be measured?”) that are answered by choosing the impacts of interest, selecting metrics, collecting relevant data, organizing data through a methodology, and presenting the results. This RIA approach has the strength of being highly flexible across a huge range of policy questions. In the right hands, this approach can produce high quality analysis. However, it is difficult to apply because it is vulnerable to analyst bias, requires a high level of skill to carry out and use, and demands access to a substantial amount of novel and relevant data.

- A focus on costs and benefits rather than need for government intervention. The focus on comparing solutions through valuation of impacts rather than fundamental principles of “choosing the right solutions for the problem” has allowed regulators to avoid key questions (Should we regulate? What role should the market play?) in favor of comparing less optimal solutions chosen through unclear and often political values.

- A focus on analysis rather than public debate. The classical RIA emphasizes quantitative answers rather than the logic and qualitative input from stakeholder consultations that can be so valuable in avoiding mistakes. There is continuing confusion about how to integrate stakeholder inputs into the formal framework of classical RIA.

- An attempt to find “the right answer” rather than to reject the worst solutions. The classical RIA tends toward hyper-rationality, a level of precision impossible to achieve with data constraints. However, most of the worst government mistakes in regulation do not require precision at all, but adherence to basic principles of market failures and government intervention.

The success and widespread application of the simple Standard Cost Model (SCM), inadequate though it is as a RIA method suitable for making sound policy decisions, demonstrates the importance of developing a RIA method that focuses on different questions, is less data-intensive, less dependent on analytical training, more integrated into stakeholder consultation as a learning method, more intuitive, and easier to implement and use in countries in the early years of RIA. This chapter recommends development of a simplified and highly structured RIA approach that can, over time, evolve into the more complex RIA that is needed to reduce the failure rate of RIA systems.

Why Does RIA Fail?

One does not have to look far to find examples of the difficulty of implementing RIA. Even in countries with several years of experience in mandatory RIA processes, quality of analysis continues to be uneven. Multiple reasons for this high rate of failure in producing acceptable RIA have been repeatedly documented, as discussed briefly below. A few examples provide the picture:

A study of 27 EU countries in 2008 concluded: “In almost all cases we have examined, there is a large gap between requirements set out in official documents and actual Impact Assessment practice. In most countries we found examples of both good and bad practice, but typically assessments are narrow, partial and done at a late stage. In many countries, a large share of proposals is not formally assessed or is assessed with a ‘tick box mentality’.”[2]

In a survey of RIA practice and methods across several OECD countries in 2006, Jacobs found that:

In country after country, RIA does not quantify enough impacts, and does not rigorously examine alternatives. Quantification of benefits is an enormous problem affecting the majority of RIAs in every country. Part of the reason for this seems to be a lack of investment in skills and incentives, as discussed, and part seems to be inattention to key constraints on good quality analysis, particularly the availability of good data at affordable cost. Another problem is ineffective prioritization, or targeting, of RIA resources.[3]

Some RIA failures are simply part of the normal reform process. In a jurisdiction adopting RIA, RIA quality is not a linear upward trend from day one, but actually follows a dramatic U-shaped curve. In the early years, relatively few RIAs are conducted, but they are conducted under the scrutiny of a small cadre of RIA experts. This is often called the pilot phase. As RIA is integrated into general policy processes, it is carried out by a larger and larger group of people with fewer skills. That is, RIA is decentralized to institutions where capacities are weaker. In this period of expansion, the quality of RIA seems to be declining. At some stage (the consolidation stage), the training and other quality control mechanisms catch up with the expansion, and the quality of RIA should begin to rise again.[4]

But the progression of RIA through the U shape is by no means assured. Some countries remain stuck in the low-quality end of the curve. Evaluations have documented many of the reasons for RIA failure. Ladegaard in 2008 wrote about “the critical importance of the institutional underpinning of RIA systems,” and it is clear that many countries have not understood the need for what this author calls “deep embedding” of the RIA method inside the policy machinery of government. Rather than being part of the drive train of policy making, RIA is in many countries simply bolted onto machinery that is pre-designed to produce low-quality legal norms. For example, one of the most common reasons for RIA failure is simply that RIA is carried out too late in the policy process, sometimes after a legal norm has been written and even approved.[5] To succeed in the long term, regulatory quality management must become as much a part of public management as have fiscal management and human resource management.[6]

The OECD for its part identified many barriers to mainstreaming RIA in a 1997 book[7], written during the heyday of RIA adoption in OECD countries, that remains today highly relevant to new countries adopting RIA:

- Insufficient institutional support and staff with appropriate skills to conduct RIA.

- Limited knowledge and acceptance of RIA within public institutions and civil society reduces its ability to improve regulatory quality.

- Lack of reliable data necessary to ground RIA.

- Lack of an orderly, evidence-based and participatory policy-making process. RIA by itself will not solve all the problems in a regulatory regime.

- Indifference by the public administration, mainly due to inertia in the political environment.

- Opposition from politicians concerned about losing control over decision-making.

- A rigid regulatory bureaucracy and vested interests that oppose reforms.

These problems should not dissuade countries from implementing RIA. Many of these barriers are normal problems in policy reforms, and can be resolved through clear action plans based on good international practice and focused on the specific issues. What has been missing from the discussion about the difficulty of implementing RIA is the importance of the analytical methodology that is at the heart of the RIA program.

The Classical RIA Method and its Role in RIA Failure

A key reason for the failure of countries to implement RIA is the classical RIA method itself. Since the 1990s, the OECD RIA method has been the mainstream approach in OECD countries and has been recommended to non-OECD countries by this author and many others.

This RIA method, called here the “classical RIA method”, has been widely adapted and takes many forms. It is founded on the concept of the Kaldor–Hicks criterion applied through the benefit-cost method, which requires that the analyst quantify, value, and compare a wide range of social welfare impacts. In the RIA, the Kaldor–Hicks test is used 1) to rank possible future scenarios (called policy options in the RIA) based on their “net welfare”, 2) to reject policies that cannot make the country better off (where benefits are not greater than costs), and 3) to select among the surviving scenarios the one projected to result in the highest net benefit. The valuation of economic resources is based on opportunity costs (proxied by market prices), while the valuation of non-economic impacts is based on many other methods such as revealed preference or the subjective weightings of multi-criteria analysis.
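The three uses of the Kaldor–Hicks test described above can be sketched in a few lines of code. This is only an illustration under stated assumptions: the option names and the monetized figures are hypothetical, and a real RIA would first have to produce defensible present values for benefits and costs, which is exactly the hard part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyOption:
    name: str
    benefits: float  # monetized social benefits (hypothetical figures)
    costs: float     # monetized social costs (hypothetical figures)

    @property
    def net_benefit(self) -> float:
        return self.benefits - self.costs


def kaldor_hicks_select(options):
    """Apply the three steps: rank, reject, select."""
    # 1) Rank all options by net benefit, highest first.
    ranked = sorted(options, key=lambda o: o.net_benefit, reverse=True)
    # 2) Reject options whose benefits are not greater than their costs.
    surviving = [o for o in ranked if o.benefits > o.costs]
    # 3) Select the surviving option with the highest net benefit, if any.
    best = surviving[0] if surviving else None
    return ranked, surviving, best


options = [
    PolicyOption("licensing regime", benefits=120.0, costs=150.0),
    PolicyOption("information disclosure", benefits=90.0, costs=40.0),
    PolicyOption("voluntary standard", benefits=60.0, costs=25.0),
]
ranked, surviving, best = kaldor_hicks_select(options)
print([o.name for o in ranked])
print(best.name if best else "no option passes the test")
```

In this made-up example the licensing regime is rejected because its costs exceed its benefits, and information disclosure is selected. Note that the result depends entirely on the valuations fed into it.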

These concepts have been melded into a generalized policy analysis framework to create a distinctive RIA approach that is characterized by several distinct features:

Problem definition. Every RIA guide in the world instructs the analyst to begin with a problem definition that attempts to link the problems to be solved with the causes of those problems. This important step is meant to determine where and how government action might contribute to a solution. It is universally described as the most important part of the RIA, since 1) logically, no problem can be solved unless its causes are clearly understood; and 2) many problems should not be solved by governments, but by markets, and the problem definition should identify where this is true. The European Commission 2009 Impact Assessment guidance sensibly requires that the RIA “should explain why it is a problem, why the existing or evolving situation is not sustainable, and why public intervention may be necessary.”

Yet the problem definition is the most neglected part of most RIAs. Clarity about the causes of problems runs head-on into the controlling regulatory cultures of most ministries: they assume that governments must act, many problems are caused by government failures that they prefer not to identify, and by habit they focus immediately on solutions rather than on diagnosing problems. The problem definition typically becomes a self-serving statement about a general problem that justifies government action, without any logical format or inquiry into market failures. Sometimes, the problem is defined as the lack of government regulation (“the problem is that there is no licensing requirement…”), a logical fallacy in which the RIA begins where it should be ending.

Developing a baseline scenario. Another important analytical element of the RIA is the baseline scenario. The baseline scenario is meant to provide the counterfactual against which all other scenarios are compared. It is needed because the measurement of impacts in the RIA is a marginal measurement, that is, the RIA shows the differences in impacts between the baseline scenario and the scenario being analyzed. Without a clear baseline scenario, the marginal impacts of the other scenarios are not clear and cannot be compared.

The baseline scenario is the part of the RIA where ex post evaluation is incorporated into the ex ante projections of the rest of the RIA. This is because the baseline is typically a projection into the future of past trends in the problem.

The baseline is also typically neglected, because regulators have normally not identified the problem sufficiently clearly to enable them to choose a metric for measurement that would permit them to evaluate past trends or make projections. Nor do they have enough data to quantify the problem or establish a trend line. This is unfortunate, because the baseline is where regulators are held accountable for their problem definition.
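The baseline logic described above (project past trends forward, then measure each scenario as a difference from that projection) can be illustrated with a minimal sketch. The metric (injuries per year), the data, and the use of a simple linear trend are all assumptions made for illustration; a real baseline might require a different metric and a more careful projection.

```python
def fit_linear_trend(years, values):
    """Ordinary least-squares line through past observations."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def project_baseline(years, values, future_years):
    """Baseline scenario: the past trend extended into the future."""
    slope, intercept = fit_linear_trend(years, values)
    return {y: slope * y + intercept for y in future_years}


def marginal_impact(baseline, scenario):
    """Impact of a scenario measured as its difference from the baseline."""
    return {y: scenario[y] - baseline[y] for y in baseline}


# Hypothetical data: the problem is already shrinking by 40 cases a year.
past_years = [2018, 2019, 2020, 2021]
past_injuries = [1000, 960, 920, 880]

baseline = project_baseline(past_years, past_injuries, [2022, 2023])
scenario = {2022: 800, 2023: 740}  # hypothetical projection under a new rule
impact = marginal_impact(baseline, scenario)
print(baseline)
print(impact)
```

Here the rule is credited only with the reduction beyond what the existing trend would have delivered anyway, which is much smaller than a naive comparison with the last observed year would suggest.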

Choosing solutions to assess in the RIA. The value of the RIA depends on choosing a range of feasible policy scenarios to assess and then comparing them along the right dimensions. The OECD is typical in writing RIA guidance that sets out the optimal RIA approach: RIA “is based on determining the underlying regulatory objectives sought and identifying all the policy interventions that are capable of achieving them.”

This is intended to be the most innovative part of the RIA, because it encourages the regulator to consider non-traditional policy instruments that might be unfamiliar. The US RIA guidance is specific that innovative approaches should be considered: “You should describe the alternatives available to you and the reasons for choosing one alternative over another. As noted previously, alternatives that rely on incentives and offer increased flexibility are often more cost-effective than more prescriptive approaches. For instance, user fees and information dissemination may be good alternatives to direct command-and-control regulation.”[8]

Yet this part of the RIA has been shown to be highly vulnerable to selection bias. Regulators usually do not assess alternatives, such as non-regulatory approaches, that they do not favor for reasons other than analysis. Political instructions sometimes narrow alternatives at a very early stage, and civil servants run a risk in including options not chosen by the minister. Assessing a wide range of options also runs squarely against the risk-averse culture of government bureaucracy. Unfamiliar alternatives are almost never included because the risk of failure seems high, continuing the paralyzing cycle in which regulators choose alternatives based on what they have done before, rather than on what might work better.

Choosing the method in the RIA. “‘Feasible alternatives’ must be assessed through the filter of an analytic method to inform decision-makers about the effectiveness and efficiency of different options and enable the most effective and efficient options to be systematically chosen.”[9] This step is also the source of confusion in RIA application. While the original concepts of RIA are based on cost-benefit analysis, over 20 analytical methods are currently used in RIAs, and more are added every year. Jacobs identified in 2006 five categories of analytical methods used in RIA programs:

- forms of benefit-cost analysis, integrated impact analysis (IIA) and sustainability impact analysis (SIA) to integrate issues into broad analytical frameworks that can demonstrate net effects and trade-offs among multiple policy objectives;

- forms of cost-effectiveness analysis based on comparison of alternatives to find lowest cost solutions to produce specific outcomes;

- a range of partial analyses such as SME tests, administrative burden estimates, business impact tests and other analyses of effects on specified groups and stemming from certain kinds of regulatory costs;

- risk assessment, aimed at characterizing the probability of outcomes as a result of specified inputs;

- various forms of sensitivity or uncertainty analysis that project the likelihood of a range of possible outcomes due to estimation errors. Uncertainty analysis is used to provide policymakers with a more accurate understanding of the likelihood of impacts.
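As an illustration of the last category, uncertainty analysis can be sketched as a simple simulation that asks how often an option's net benefit is positive when benefits and costs are known only as ranges. The triangular distributions, the ranges, and the function name below are assumptions chosen for illustration, not a method prescribed by any RIA guide.

```python
import random


def prob_net_benefit_positive(benefit_range, cost_range, draws=10_000, seed=0):
    """Estimate the share of simulated outcomes in which benefits exceed costs.

    Each range is a (low, most_likely, high) tuple. A fixed seed keeps the
    result reproducible between runs.
    """
    rng = random.Random(seed)
    b_low, b_mode, b_high = benefit_range
    c_low, c_mode, c_high = cost_range
    positive = 0
    for _ in range(draws):
        # random.triangular takes its arguments in the order (low, high, mode).
        benefit = rng.triangular(b_low, b_high, b_mode)
        cost = rng.triangular(c_low, c_high, c_mode)
        if benefit > cost:
            positive += 1
    return positive / draws


# Hypothetical ranges for one policy option (monetary units are arbitrary).
p_positive = prob_net_benefit_positive(
    benefit_range=(50.0, 100.0, 180.0),
    cost_range=(60.0, 90.0, 130.0),
)
print(f"Estimated probability of a positive net benefit: {p_positive:.2f}")
```

Reporting a probability of this kind, rather than a single point estimate, gives policymakers a sense of how robust the conclusion is to estimation error.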

Often, the rationale for the methods used in a RIA is not clearly presented, while RIA guides provide wide discretion to the analyst without much actual guidance, resulting in confusion on the ground. Instead of focusing on methods, RIA guides ask analysts to include a range of impacts, which, if examined closely, actually require different methods that are not themselves explained. For example, partial RIA tests such as effects on small businesses or on particular groups such as “poverty” or “gender” impacts require distributional analysis, while general “economic” and “social” impacts require benefit-cost tests, and efficiency or lowest-cost tests require cost-effectiveness analysis. Some countries even require analyses (trade or job or gender impacts) without any clear method at all.

Even if the method or methods to be used to answer the key questions are clear, the conclusions of these various methods might conflict (for example, the option with the highest net benefit might also be the option with highest cost to SMEs), and conflicts are rarely dealt with explicitly.

Identifying, applying, and integrating these various methods into a clear RIA would stress the skills of even highly trained analysts, let alone new RIA analysts on the ground. As a result, RIAs in many countries tend to be a selection of information (often, the information most easily obtained) presented without clear methods. These RIAs take the form of a qualitative or legal argument, or even a persuasive essay, in which the reader is coached to arrive at conclusions with little understanding of real impacts or choices.

These are not just “teething” problems. Even the country with the longest and most intensive use of RIA is plagued by these kinds of issues. A recent paper on US RIA finds that “benefit-cost analysis has evolved into a tool that does little to inform decisions on regulatory policy. Analyses either omit consideration of meaningful alternatives or are so detailed that they become practically indecipherable (or both). And in either case they are habitually completed after a policy alternative is selected.”[10] The author calls for “a noticeably simpler analysis, which we call ‘back-of-the-envelope’ (or BOTE) analysis, conducted much earlier in the regulatory process… [that] eschews the detailed monetization and complex quantification that bedevils most current benefit-cost analyses.”

Toward a Simpler and More Effective RIA Method

These kinds of problems suggest that, as part of the larger challenge of providing an institutional framework for governments struggling with RIA, reformers interested in RIA should also focus on simplifying and improving the RIA method. Scott Jacobs wrote in 1997 that “RIA’s most important contribution to the quality of decisions is not the precision of the calculations used, but the action of analyzing – questioning, understanding real-world impacts and exploring assumptions”,[11] a sentence that has been repeatedly cited since. That statement provides some directions for improving the RIA method.

A clear view of incentives inside government bureaucracies is needed as the basis for restructuring the classical RIA method. Jacobs and Ladegaard wrote in 2010 that “incentives in public administrations are rarely supportive of reform. The quality characteristics of a good regulatory governance system do not arise spontaneously—indeed, the incentives for quality are perverse in most regulatory systems due to fragmented government institutions, career incentives, short time horizons, revenue goals, risk aversion, and capture.”[12] The classical RIA method can be easily sabotaged by these kinds of perverse incentives. While flexible, it is unstructured, allowing considerable discretion in contexts where clear and honest analysis is often not in the interest of the regulatory authority.

There will never be a single one-size-fits-all RIA method. RIA efforts must be scaled to the capacities of the country. For governments with cadres of well-trained analysts and effective oversight mechanisms, the classical RIA method will serve them well. For governments beginning with RIA, several reforms might be considered:

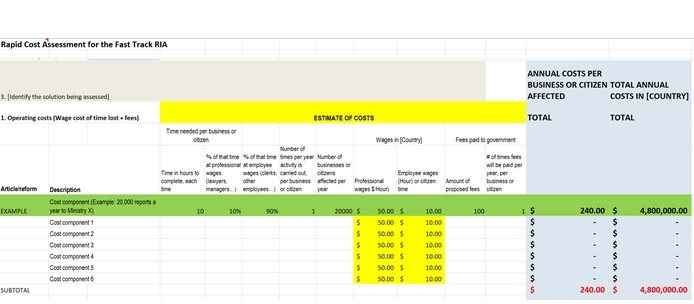

- Replace open-ended questions with structured templates and checklists: The open-ended questions (such as “What are the costs of regulation? What are the benefits? How can they be measured?”) of classical RIA should be replaced by much more standardized and focused questions aimed at the key questions to be answered. Already, governments are moving in this direction. The “standard” cost model was an early effort to hugely simplify calculation of some regulatory costs into a single formula. The Italian government produced a simplified IA template.[13] The Austrian government has produced a detailed online IA system, and the United Kingdom government has produced a RIA model in the form of an Excel spreadsheet. The World Bank produced a useful report on “Light RIA”, although it does not take the approach suggested here.[14] Figure 1 below shows an example of a JC&A rapid cost assessment model used to produce RIA.

Figure 1: Example of Customized Cost Assessment Excel Model for RIA
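To show how far the “single formula” approach simplifies the task, the Standard Cost Model calculation in its commonly published form (administrative burden = price × quantity, where price is an hourly tariff multiplied by the time an activity takes, and quantity is the number of affected businesses multiplied by the yearly frequency) can be written as one short function. The figures below are hypothetical, and this sketch is not the JC&A model shown in Figure 1.

```python
def scm_burden(hourly_tariff, hours_per_activity, businesses, frequency_per_year):
    """Annual administrative burden of one information obligation.

    price    = hourly tariff x time needed per activity
    quantity = number of affected businesses x times per year
    burden   = price x quantity
    """
    price = hourly_tariff * hours_per_activity
    quantity = businesses * frequency_per_year
    return price * quantity


# Hypothetical obligation: a quarterly report taking 2 hours at 30 per hour,
# required of 5,000 businesses.
burden = scm_burden(
    hourly_tariff=30.0,
    hours_per_activity=2.0,
    businesses=5000,
    frequency_per_year=4,
)
print(f"Estimated annual administrative burden: {burden:,.0f}")
```

The calculation needs only four inputs that officials can usually obtain through a handful of interviews, which helps explain why the model spread so easily even though it captures only one narrow category of regulatory cost.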

- Place much more emphasis on the need for and form of government intervention rather than on calculating costs and benefits of options. Most countries in transition from cultures of control to cultures of market regulation regulate far too much or through means that are unlikely to be effective or efficient. Regulators intervene in markets without a clear idea of why or how or when. Calculation of costs and benefits does not prevent these costly mistakes. Because of perverse incentives and a distrust of the relevance of the RIA, regulators do not wait to see the quantitative results before they make these decisions. By the time the RIA analyst begins to calculate these impacts, the basic decisions on whether and how to regulate have often already been made. Costs and benefits are estimated for less optimal solutions. Much of the time and effort in the RIA should be re-allocated from the cost and benefit calculations to the problem definition statement coupled with the baseline evaluation of how the problem has evolved over time. The current focus on choosing solutions by comparing valuation of impacts should be changed to apply fundamental principles of “choosing the right solutions for the problem”.

In doing this, the problem definition and baseline statements should be useful tools in answering basic questions about the need for government action. Regulators should explicitly answer key questions such as “Should we regulate?” “What role should the market play?” “What kind of government intervention is most suited to that situation?” by following a structured decision tree or similar method. A range of decision methods can be used to simplify the task of choosing appropriate solutions and eliminating damaging regulatory designs without carrying out benefit-cost analysis. The United States, for example, has adopted a “rule of thumb” approach by warning regulators, ex ante, that regulatory designs that replace the market should not be used: “a particularly demanding burden of proof is required to demonstrate the need for any of the following types of regulations:

- Price controls in competitive markets

- Production or sales quotas in competitive markets

- Mandatory uniform quality standards for goods or services if the potential problem can be adequately dealt with through voluntary standards or by disclosing information of the hazard to buyers or users

- Controls on entry into employment or production, except (a) where indispensable to protect health and safety (e.g., FAA tests for commercial pilots) or (b) to manage the use of common property resources (e.g., fisheries, airwaves, Federal lands, and offshore areas)”
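A structured screening of this kind can be sketched as a very small decision tree. The sketch below is a hypothetical illustration, not an official procedure: the field names, the category labels, and the wording of the outcomes are assumptions, and the conditions and exceptions attached to each category in the guidance quoted above are collapsed into a single flag.

```python
from dataclasses import dataclass

# Labels (hypothetical) for the four regulatory designs listed above.
HIGH_BURDEN_DESIGNS = {
    "price_control_in_competitive_market",
    "production_or_sales_quota_in_competitive_market",
    "mandatory_uniform_quality_standard",
    "control_on_entry",
}


@dataclass
class Proposal:
    name: str
    failure_identified: bool  # has a market or government failure been shown?
    design: str               # type of instrument proposed
    # Simplification: one flag stands in for the stated exceptions, e.g. an
    # entry control that is indispensable to protect health and safety.
    exception_applies: bool = False


def screen(proposal: Proposal) -> str:
    """Answer the basic questions before any cost or benefit is calculated."""
    if not proposal.failure_identified:
        return "no case for intervention shown: revisit the problem definition"
    if proposal.design in HIGH_BURDEN_DESIGNS and not proposal.exception_applies:
        return "demanding burden of proof: consider market-compatible alternatives"
    return "proceed to compare options"


proposals = [
    Proposal("taxi fare cap", True, "price_control_in_competitive_market"),
    Proposal("pilot licensing", True, "control_on_entry", exception_applies=True),
    Proposal("new permit scheme", False, "control_on_entry"),
]
for p in proposals:
    print(f"{p.name}: {screen(p)}")
```

A screen like this requires no data collection and no valuation, yet it forces the regulator to answer the questions of whether and how to intervene before effort is spent on quantification.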

- Use stakeholder consultation, rather than only quantitative tables, to check the reasonableness of regulatory solutions. While the classical RIA method does require that stakeholders have input into the RIA, in practice governments have not integrated consultation and RIA very well. Confusion about how to integrate stakeholder inputs into the formal framework of classical RIA is widespread. This is often a methodological problem of integrating quantitative and qualitative information, and often a political problem of interpreting values expressed through consultation. As a result, undue attention is placed on collecting data of often dubious reliability, and too little on exploiting the information held by stakeholders. At its most technical, RIA can create another barrier to participation by groups without quantitative skills, the opposite of the transparency that RIA was originally intended to bring to policy development.